Apache Airflow for scheduling and monitoring ETL and ML model scoring

Continuous, smoothly running data pipelines and ML model scoring processes are key to delivering timely insights and predictions for our customers. Although cron jobs are easy to create, they become difficult to orchestrate, maintain and manage as pipelines grow. Airflow, which uses Directed Acyclic Graphs (DAGs) to manage workflow orchestration, provides an easy way to define tasks and their dependencies, and then takes care of executing them on schedule.
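To make the DAG idea concrete, here is a minimal sketch of how declared dependencies determine a valid run order. It uses only Python's standard library (`graphlib`, Python 3.9+) rather than Airflow itself, and the task names are hypothetical, not taken from our pipelines.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "score_model": {"transform"},
    "load_results": {"score_model"},
    "publish_metrics": {"score_model"},
}


def execution_order(deps):
    """Return one valid order in which every task runs after its dependencies."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

Because `load_results` and `publish_metrics` both depend only on `score_model`, either may come first; a scheduler such as Airflow is free to run independent tasks like these in parallel.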

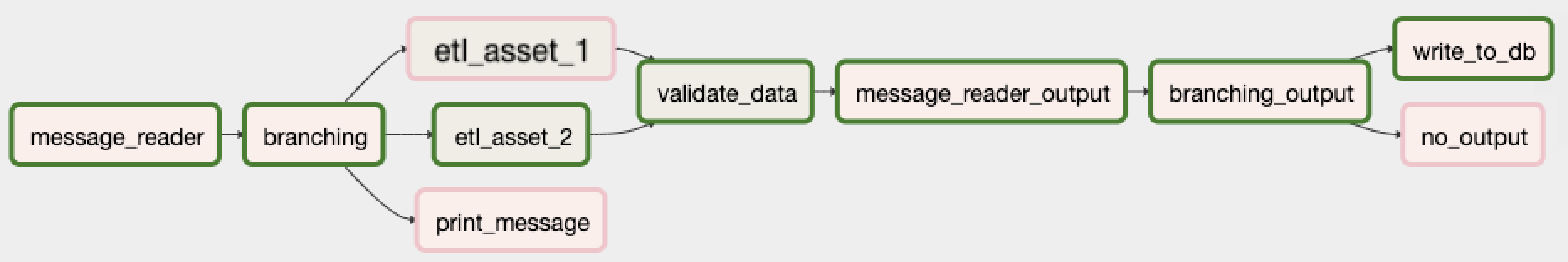

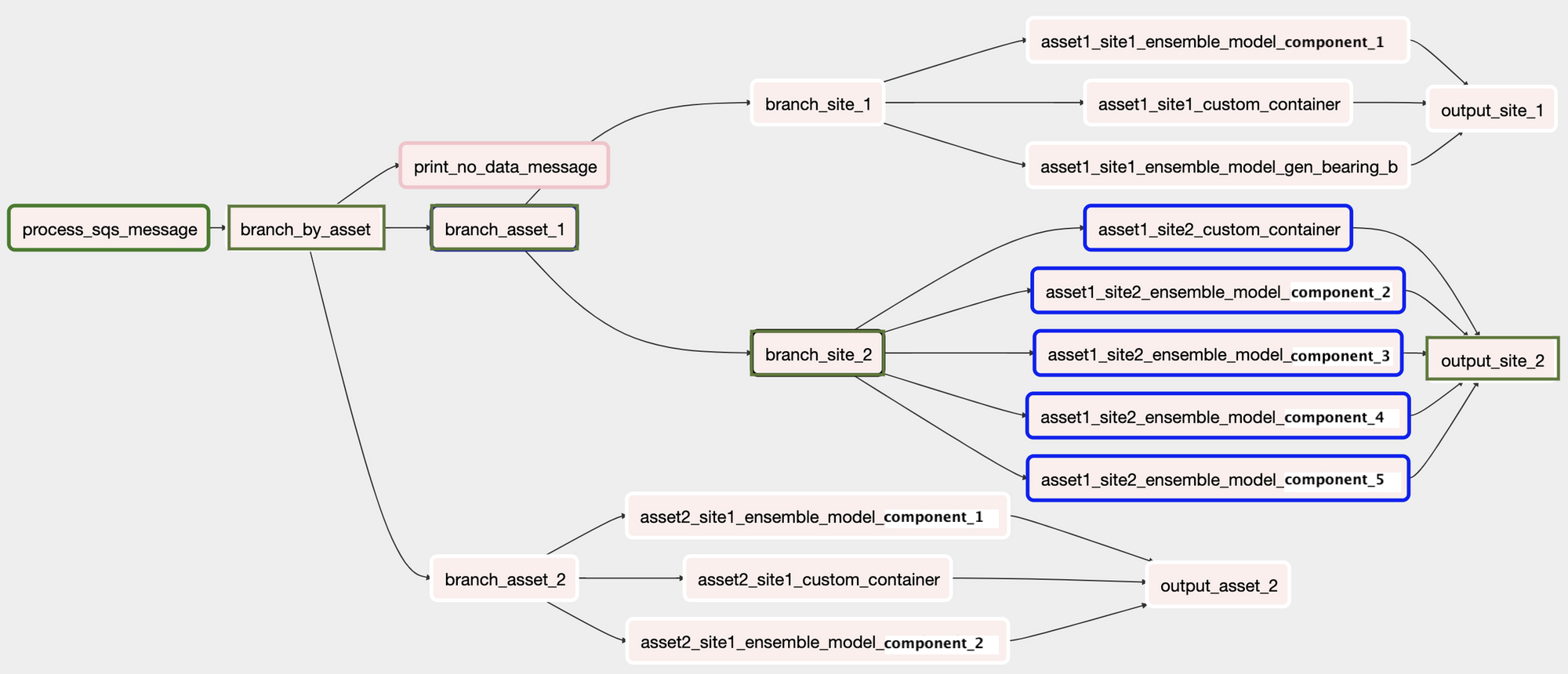

While getting started with Airflow is easy and the large number of operators provides great flexibility, those operators also introduce dependencies that are hard for developers to debug. Having experienced these issues early on, and having learned from what others said (here and here), we decided to stick to a few operators for all our needs. Most of our DAGs use only the Python, Branch and ECS operators. Any task requiring more than basic processing is containerized and run on Elastic Container Service (ECS) using the ECS operator. Below is an example DAG showing how we run an ML model scoring process using these three operators. Today we have 150+ containers being run and managed by Airflow.

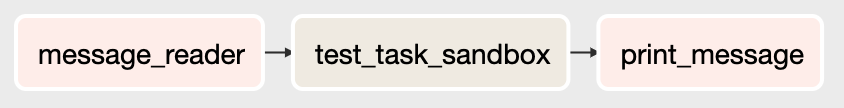
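As a rough illustration of the branching step, Airflow's branch operator is given a Python callable that returns the `task_id` of the downstream task to follow, and the other branches are skipped. The sketch below shows only such a callable, without importing Airflow so that it runs standalone. The task ids, the function name and the "score only when new files exist" rule are assumptions made for illustration, not our production logic.

```python
# Hypothetical task ids; in a real DAG these would match the task_id
# of the downstream operators (for example an ECS task and a no-op task).
SCORE_TASK_ID = "run_scoring_on_ecs"
SKIP_TASK_ID = "skip_scoring"


def choose_next_task(new_file_keys):
    """Return the task_id to follow: score when input files exist, else skip."""
    if new_file_keys:
        return SCORE_TASK_ID
    return SKIP_TASK_ID


print(choose_next_task(["incoming/batch.csv"]))
print(choose_next_task([]))
```

Keeping the decision in a small pure function like this makes it easy to unit test outside of Airflow, while the heavy processing stays in the container that the ECS operator runs.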

Below is a sample code snippet for a simple DAG that is triggered via an SQS message when a file is dropped into an S3 bucket; the file is then processed using the ECS operator.